Ten Tips for Starting Test Automation – Part 1/2

In modern software development, the need for test automation is evident. Recent trends such as Continuous Delivery and DevOps aim for fast, iterative software releases and for automation of the development and release pipeline, from the developer workstation all the way to production deployment. This poses major challenges for the testing and QA practices needed to ensure product quality. When release cycles shrink from months to weeks or days, testing every release by hand is no longer viable. Even basic regression testing of unchanged functionality can easily exhaust the available testers, putting release schedules and overall product quality at risk.

Luckily, getting started with test automation is easy. Numerous automation tools and frameworks exist, and plenty of material is available on the internet. With these, even testers with no previous experience in test automation development can get their first tests up and running relatively quickly.

However, what starts as a small and easy automation project tends to grow and become more complicated. As time goes by, new tests are added, various product variants and versions need support, test environments and tools change, people leave and join the project, and a bunch of other unforeseen changes happen. Without planning and a clear focus, automation projects can easily become bloated and side-tracked, leading to an exhausting maintenance burden that eventually erodes the benefits gained from automation.

This two-part post gives ten pointers for getting started and keeping your automation projects on the right track. The list is not complete, but it highlights common culprits and challenges observed in many automation projects.

Automation is a tool for testing, not a replacement

A common misunderstanding across the IT industry is that all testing should be automated. In reality, 100% test automation does not exist, or at least it is not cost-effective.

Automated tests are merely checks that ensure the software works as expected. As such, automation is an excellent tool for checking that nothing broke when new product features were added and that the new features work according to the specification. In practice, however, it doesn’t make sense to check every imaginable detail of the product, as that would eventually make your test suite unmaintainable. Automation also provides very limited or no support for identifying unknowns and unspecified product behaviour.

Understanding whether the developed features really fulfil your customers’ needs in a given context and under given constraints still requires plenty of human thinking, communication, collaboration and exploration. Create automation that takes over the repetitive tasks well suited to it, so that testers can focus on the essentials.

Your tests are a product

Many companies have well-defined processes and practices for developing quality software products, but lack nearly all quality control when it comes to developing and maintaining test assets. Test assets may also be developed and maintained by testers who have less software development and coding experience than full-time software developers.

Although tests are not typically delivered to your customers with product releases, the lifecycle of your tests can be as long as the lifecycle of your products. Tests written today may be used for years after product delivery to verify future maintenance updates and releases.

To ensure your test assets are reliable, maintainable and easily extendable, enforce good software development practices. Also pay attention to selecting the right automation tools and framework for your testing needs.

A few things to think about in test asset development:

- Version control, branching, tagging, linking tests to product versions.

- Uniform and consistent coding convention.

- Self-describing test code naming (classes, methods, variables, commits).

- Configurability vs. hard-coded values and magic numbers.

- Quality practices & learning: code reviews, pair and mob programming.
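As a rough illustration of the naming and configurability points in the list above, here is a minimal Python sketch. Everything in it is hypothetical and made up for this example: the `SuiteSettings` class, the `TEST_BASE_URL` and `TEST_LOGIN_TIMEOUT_SECONDS` environment variables, and the default values. The idea is only that environment-specific values live in one named place instead of being scattered through the tests as magic numbers.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class SuiteSettings:
    """Values a test run depends on, gathered in one place (hypothetical)."""
    base_url: str
    login_timeout_seconds: float


def load_settings(env=None):
    """Read settings from environment variables, falling back to defaults.

    The variable names and defaults are assumptions made for this sketch.
    """
    env = os.environ if env is None else env
    return SuiteSettings(
        base_url=env.get("TEST_BASE_URL", "http://localhost:8080"),
        login_timeout_seconds=float(env.get("TEST_LOGIN_TIMEOUT_SECONDS", "5")),
    )


def login_page_url(settings):
    """Build the login page address from the configured base URL."""
    return settings.base_url.rstrip("/") + "/login"
```

With something like this in place, pointing the same tests at a staging environment could be a matter of setting `TEST_BASE_URL` before the run rather than editing test code.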

There is no “I” in “automation”

The value of any testing lies in providing information about the product to stakeholders. With that in mind, automation should never be only your personal quest.

Before starting automation, get commitment from your team and organization. Define the expected goals and scope for automation together, and include them in your team’s DoD (Definition of Done). Work together as a team, with the support of both developers and testers, to achieve your goals.

Make sure the results of automated tests are always up to date and available to your team and other stakeholders, so they have the latest information to support development decisions. Automate only tests that provide meaningful information; there’s no point in automating things that nobody cares about.

Solid base

Most testers who start dabbling with automation usually get their first experience with UI (GUI) automation tools. In a web context, that is commonly Selenium WebDriver or some test framework using Selenium under the hood. It makes perfect sense: as a tester you obviously want to test the whole application end-to-end, with all nuts and bolts installed. And Selenium is a great tool for that, with plenty of resources and examples to get started.

Alas, eventually you learn that UI tests can be among the most problematic tests to automate. First of all, they can be problematic for the project schedule. Testing the product via the UI means you need a working UI. However, the UI is often subject to continuous changes and fine-tuning, and may be finalized only at the very end of the project. Sometimes even the smallest UI changes can break your automation scripts, requiring excessive maintenance and refactoring work to keep up with the latest UI design. Secondly, UI-based end-to-end tests that run against the whole system tend to be slower to execute and more brittle, as there are more moving parts. Setting up the test environment and developing the tests is also usually more costly, as there are more parts to configure and manage.

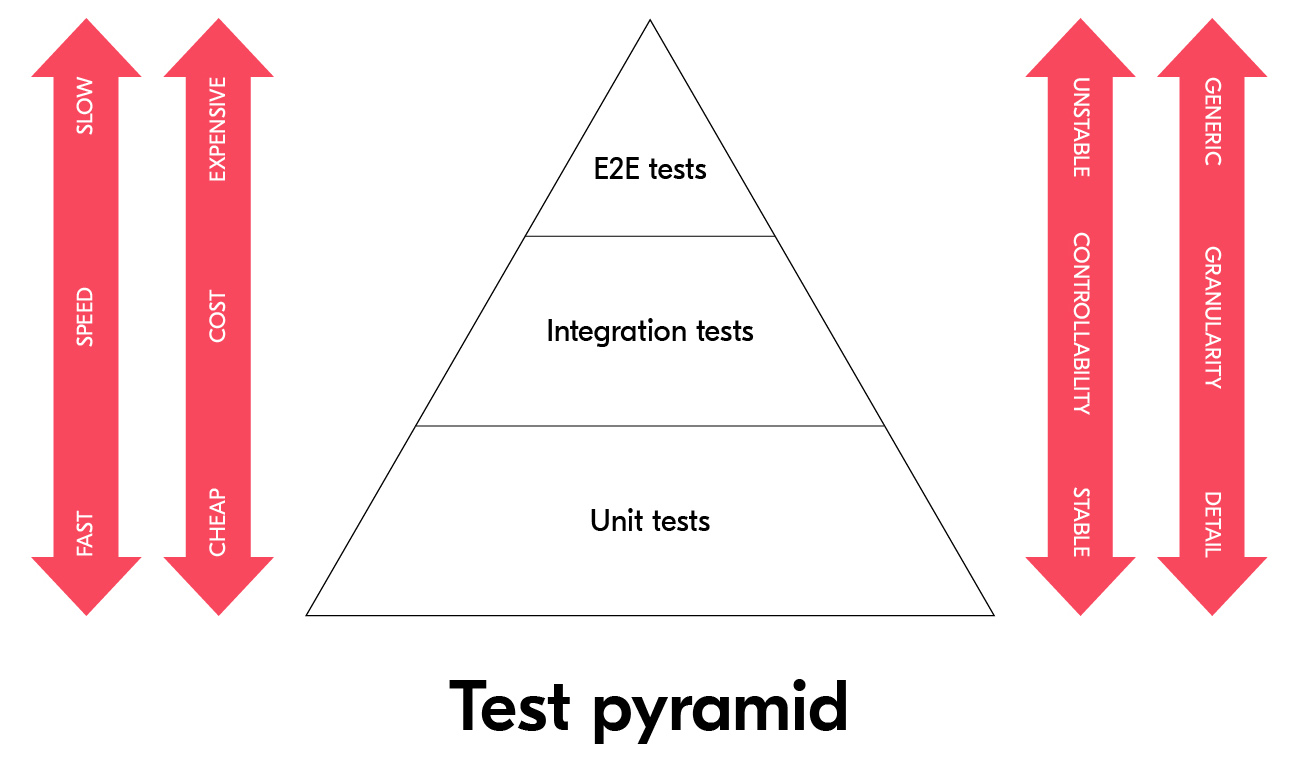

Teams often put too much value on testing everything end-to-end in a realistic environment with the production UI. Aim to start automation as early as possible, at the level where the functionality is implemented. Shift left. Have plenty of precise, fine-grained tests, keep the feedback loop from testing to development fast, and aim to develop tests that are robust to unrelated changes. Work your way up with relevant tests at each level, all the way from single units to APIs and finally to the UI layer. This approach is usually described as a test pyramid, an idea originally put forward by Mike Cohn and a few others, in which a solid base of unit and integration tests checks most of the things to be covered in testing.
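To make the base of the pyramid a bit more concrete, here is a minimal Python sketch of a rule checked directly at the unit level, with no UI or browser involved. The `shipping_cost` function, its limit and its flat rate are invented for this example and do not come from any real product. The `run_shipping_tests` helper is likewise just a convenience for running the checks from code.

```python
import unittest


def shipping_cost(order_total, free_shipping_limit=50.0, flat_rate=4.9):
    """Hypothetical business rule: orders at or above the limit ship free."""
    if order_total < 0:
        raise ValueError("order_total must not be negative")
    return 0.0 if order_total >= free_shipping_limit else flat_rate


class ShippingCostTest(unittest.TestCase):
    """Precise checks of one rule, independent of any UI layout."""

    def test_order_below_limit_pays_flat_rate(self):
        self.assertEqual(shipping_cost(49.99), 4.9)

    def test_order_at_limit_ships_free(self):
        self.assertEqual(shipping_cost(50.0), 0.0)

    def test_negative_total_is_rejected(self):
        with self.assertRaises(ValueError):
            shipping_cost(-1.0)


def run_shipping_tests():
    """Run the test case above and return the unittest result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ShippingCostTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Tests like these run in milliseconds and do not break when a button moves, so the UI-level tests can stay few and focus on checking that the pieces are wired together.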

The test pyramid concept is also somewhat controversial and the subject of numerous discussions in the community. My personal opinion is to use it as a guideline, but not to treat it as a strict rule that can’t be broken. It’s not automatically wrong, for example, to have more end-to-end tests than integration tests automated. Also, the cost and effort needed for solid unit test coverage can come as a surprise in contexts where extensive mocking is required: tests that execute fast are not necessarily fast to implement. Aim for shift left, don’t limit yourself to testing at one level, and adapt your way of working to find the right balance of tests for your domain and context.

Don’t fall in love with your code

It’s not uncommon to see test assets filled with obsolete material such as unused classes and methods, commented-out code lines, outdated configuration files and obsolete test cases.

Obsolete code and test data make the project hard to learn, follow and work with. Enforce good coding practices that keep the code base free of obsolete and deprecated code, which can become a source of misinformation and technical debt. Make it a daily habit to clean up obsolete test assets from the repository.

To be continued

These were the first five tips for getting started with test automation. They focused on the general aspects of the automation process, while the next five tips will cover issues to consider in test implementation. Those will be published soon in the second part of this post.

Happy testing.